There is no shortage of stories about tech founders achieving face-melting wealth from startup success. Bless their hearts.

On the other side are stories with unhappy endings of founders sacrificing everything for the sake of their startup. I hate those stories.

This story lies smack-dab in the middle. There is zero money made and minimal money lost. This is a story of how I had an idea that I was excited about, pursued it for 6 months, and then decided to pull the plug and get a job.

The Idea

As I wrote previously, I spent the first half of 2019 freelance data science consulting. I had always wanted to “do” a startup, but I had not had any good ideas (a surplus of bad ideas, though!). One of the reasons that I had wanted to do consulting was to get a broader view of the industry, and I hoped this expanded view would reveal some holes that a startup could fill.

Well, I got an idea stuck in my head. It was so stuck, that I had a hard time finishing contracts that I had started. I turned down new work, let my contracts expire, and decided to work full time on this idea.

The easiest way to describe this idea is in classic startup parlance: I wanted be “Datadog for machine learning”. Like Datadog, I envisioned creating a simple (from the outside) monitoring service. This service would track the performance of machine learning models. By performance, I mean the quality or accuracy of machine learning model predictions.

For those unfamiliar with machine learning, monitoring these systems is a bit more complicated than conventional DevOps monitoring. With something like Datadog, one typically logs scalars (i.e. single numbers) at single points in time associated with some categories, tags, or dimensions. For example, you may log the CPU usage percentage (the scalar number) for all of your Payment API hosts in the US (where API-type and country are dimensions). With machine learning, you typically have at least two scalar value events that occur at separate points in time, and you have to deduplicate, aggregate, and join these events.

Let me illustrate with an example. At my last job, we would send customers a box of clothes, sight-unseen. The customer could keep and pay for whichever items they liked and return the ones that they did not like. I built a model to predict the probability that a customer would keep an item of clothing. We had human stylists who used an internal, ecommerce-like website to search our inventory and virtually pick garments to send to the customer. My algorithm was deployed as an API, and it would generate predictions every time the inventory was searched. We logged these predictions via an event tracking system. A week or two after the clothes were sent, we would find out which garments the customer kept. This information was stored in our transactional database. In order to determine the accuracy of my algorithm, I had to query two different databases, deduplicate prediction events, join data in Python, and handle a number of logic and time-dependence details.

While this monitoring process was certainly doable, it was certainly annoying, as well. First and foremost, I wanted to build a service that made this whole process extremely simple. Simpler than writing my own ETL pipelines. It’s enough work to get a model into production – monitoring should be almost free to tack on. Secondly, I wanted to be able to interactively slice and dice my model’s performance across a range of dimensions over time. How do new customer’s predictions compare to recurring customer’s? How have these two segments’ predictions changed over time?

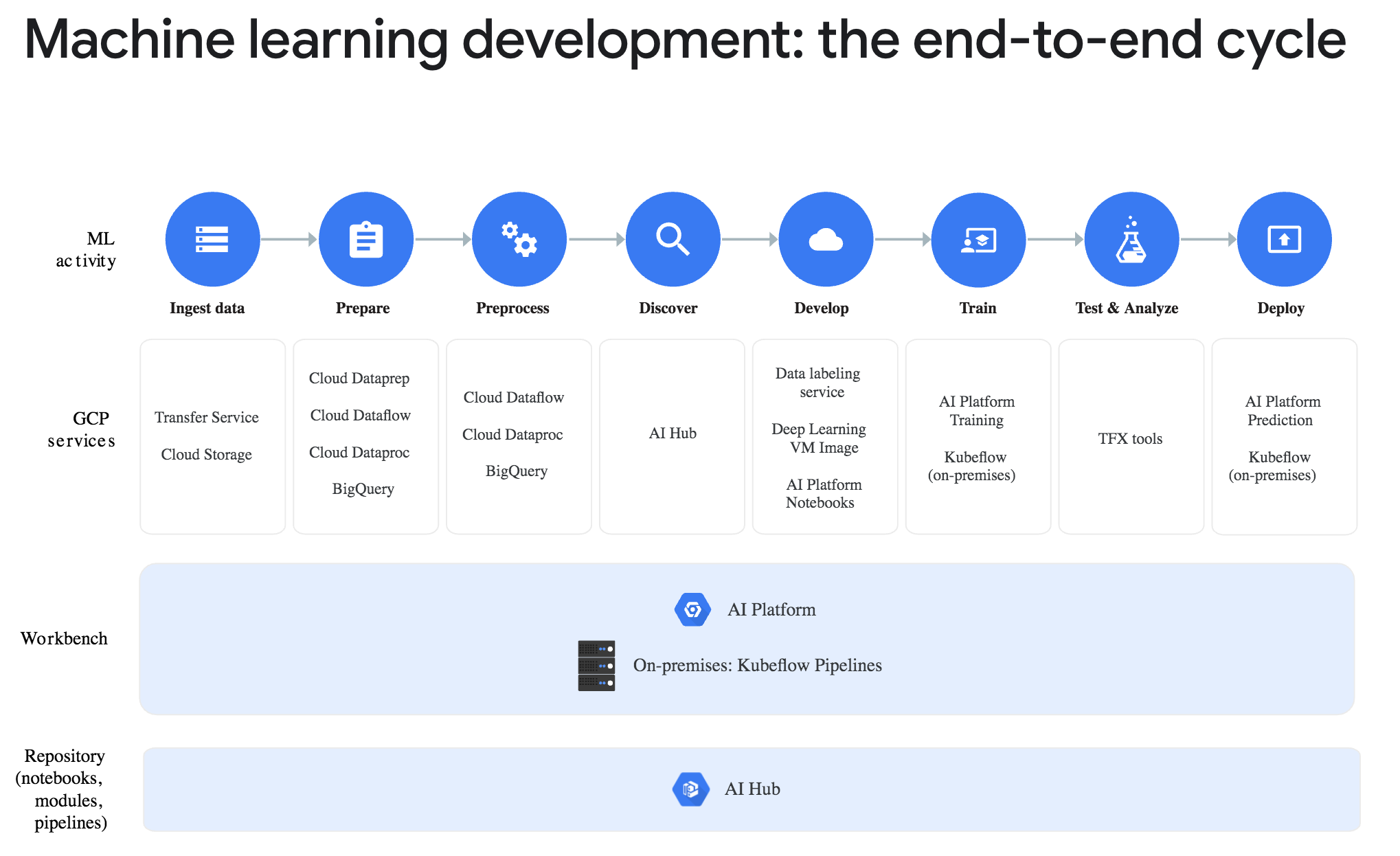

At that job, we had an excellent Data Platform team that had built out tooling to make it easy and automated for data scientists to train models in the cloud and deploy these models as discoverable APIs. There now exist a bunch of vendors and managed services that do this, too. What I saw was missing was everything that occurs after deployment. Like, if you are going to let the robots make the decisions, the least you can do is keep an eye on them. I got particularly excited when I looked at the Google AI Platform page and saw this diagram that literally stopped at Deploy. It seemed like there was space for a new product.

Google AI Platform offerings as of January 2020

The Approach

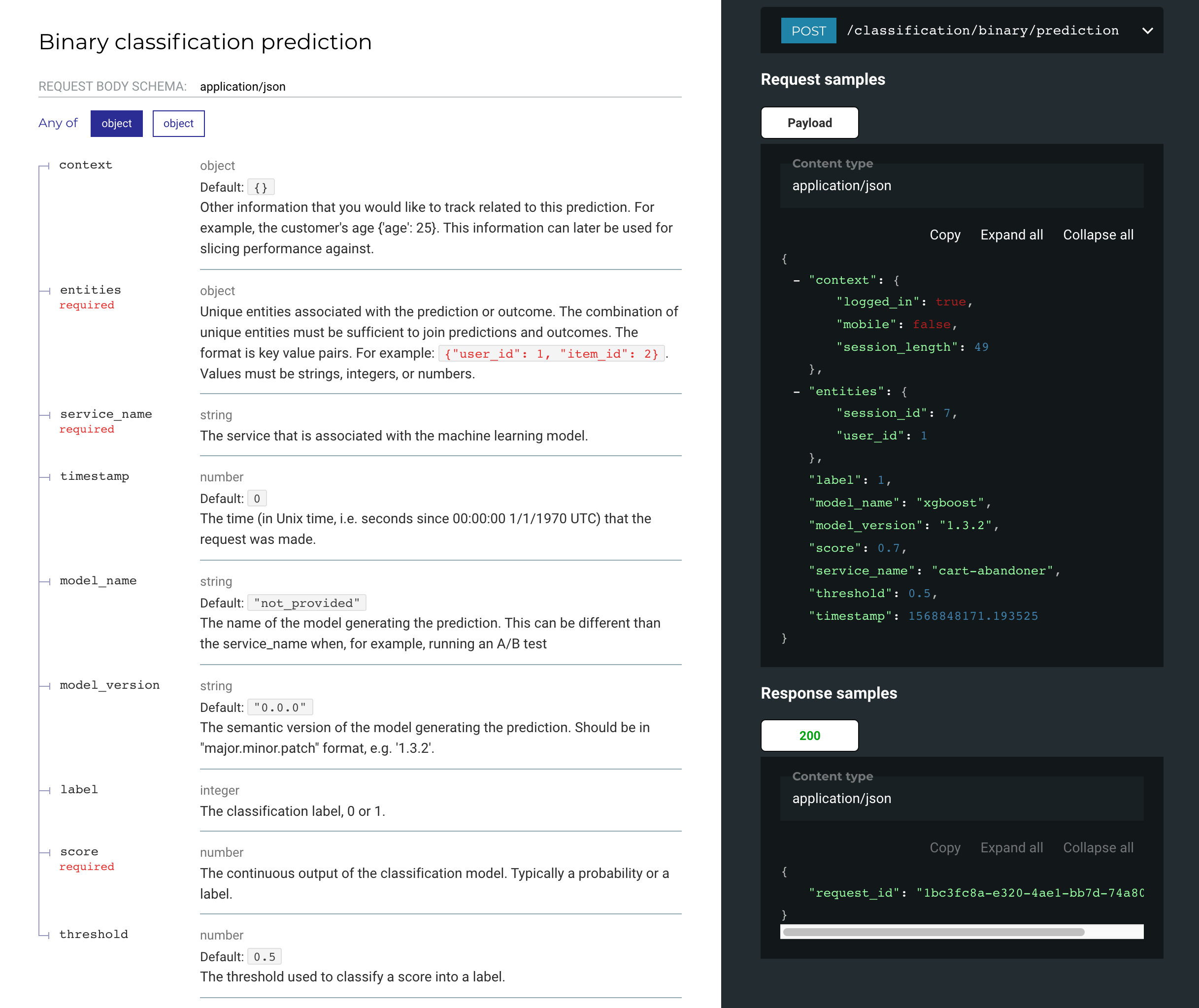

As I mentioned, I wanted to build something that was simpler for a data scientist to use than writing their own ETL. The simplest thing that I could imagine was a public-facing API. I built endpoints for a bunch of common machine learning models:

- Binary and Multiclass Classification

- Single output and Multioutput Regression

- Time Series Forecasts

- User-to-Item and Item-to-Item Recommendations

Each type of machine learning model had a prediction and outcome endpoint, like

/classification/binary/prediction/classification/binary/outcome

Anytime the user’s model generated a prediction, they would POST some semi-structured JSON to my API. Anytime an “outcome” occurred (e.g. a purchase, a click, etc…), they would POST similar semi-structured JSON to my API. I would then handle the ETL of deduping, aggregating, and joining these events. Because I knew the type of machine learning model the user was tracking, I could calculate all of the relevant performance metrics out of the box (e.g. accuracy, precision, and recall for a classification model).

The Vision

As someone who follows people like Patrick Mackenzie and Tyler Tringas, I am predisposed to look for simple SaaS businesses. This idea checked off all of the boxes for a company that I was interested in building.

✅ High-margin SaaS business.

✅ Solves an actual problem.

✅ B2B, so potential customers actually have money.

✅ Seemingly bootstrap-able.

✅ I had the capacity to figure out how to do everything myself.

In addition to the above, as I thought more and more about this idea, I envisioned all of the places that this product could go. Monitoring ML models is simply the entry point to a world of possible features and products! Intelligent alerting, root cause analysis, logging features at the time of prediction to build perfect training data, backtesting, a compliance audit log, fairness accountability, automated performance improvement suggestions, and so on. I could foresee 5 years of work towards building that grand vision. It was this excitement that pushed me to work on this full-time.

The Progress

Like a good student of Silicon Valley, I worked hard to build a Minimum Viable Product (MVP) and get it in front of potential users.

I had started working on this problem part-time around May 2019 and switched to full-time in July. By mid-August, I had a proper MVP consisting of a public-facing API, ETL pipelines, and a UI consisting of both a conventional SaaS CRUD app and interactive dashboards.

The MVP was ugly and feature-incomplete. I gritted my teeth and showed it to people. I spent mid-August to mid-November pitching the product to companies, getting rejected, and working on the product. My goal was to determine whether it was possible to build a general product to monitor 80% of peoples’ ML models, or if everybody’s ML systems were so bespoke that custom monitoring solutions would have to be built for each one. I still have not answered this question.

API Docs

The Problems

So what went wrong? Other than the product, the market, and the fit, everything was great 🙂.

The Market

I talked with companies at many stages of maturity and found as many reasons why my product was not right for them:

- Some companies are trying to get anything into production. The last thing they care about is post-deployment monitoring.

- Some companies have a couple ML models, and they manually run custom monitoring ETL/SQL jobs for each model. They are fine with this. Their ML models are not mission critical.

- Sometimes companies automate their custom ETL jobs, and they are even more fine with this.

- Some companies have multiple ML models, but they are mission critical. Due to their importance, the companies have already built out robust monitoring.

What’s the ideal company, then? Probably a company with lots of ML models and a growing team of people building them. Say, 7+ models and 5+ data scientists? As this team scales, a centralized, common monitoring platform becomes important. Ideally, the company should be deploying “important” models that either impact revenue or carry risk.

I found a couple ML-heavy startups which had large-ish data teams, but they often had relatively unimportant models. Or, and this is key, they were perfectly happy to write custom monitoring jobs for each model. After all, if you are savvy enough to deploy an ML model, you are probably more than capable of deploying a job to monitor it.

I also started thinking about what other types of companies would match my ideal, and I realized that enterprises likely have large data teams with potentially important models (think: banks). These enterprises have also probably been sold vendor solutions for handling general training and deployment. Of course, if you already have a vendor solution for training and deployment, it’d be a lot easier if that solution also included monitoring…

The Product

As mentioned, I did find some ML-heavy startups that seemed like potential candidates. Many had significant concerns about sending me their data. They wanted to deploy my system within their own cloud. I ignored this for a while. After all, companies like Segment are current unicorns, and other companies have given them carte blanche to suck up all the proprietary data they want. Hell, Customer Data Platform is a thing.

Besides sending me data, companies had other requests of the product. Many were solvable. Companies wanted a batch upload option rather than API access. Fine, this is easy. Some companies wanted a Kafka consumer or an SDK to interact with the API. This can be done. But, some companies had gnarly logic around filtering out certain ground truth data. They were already doing this for their ETL jobs to populate their data warehouse, and they would potentially have to duplicate all of this for my service. Or, companies wanted to be able to join a lot of other, “contextual” data, and it did not make sense to send this via API. Solvable problems, but… the path forward was less clear.

The Fit

So what to do? One potential pivot to overcome customer objections was to change my product to deploy within the customer’s cloud. My existing product relied heavily on AWS managed services which would make this difficult. It was hard enough maintaining everything in my own cloud, as I was constantly changing stuff as I tried to figure out the right things to build and the best schemas to use. Some things were automated, some weren’t. The thought of transferring my heavy iteration from my own, single (though multi-tenant) product to multiple, individual products in customers’ clouds was… daunting.

Another pivot? Focus on enterprises, where there is a clear need. That is a world that I know nothing about and have no desire to play in. It would also still require deploying within the customer’s cloud (or data center 😬).

Lots of people wanted an all-in-one solution containing training, deployment, and monitoring. That space is crowded.

Is there space for a horizontal monitoring product? I still think yes, but the market is small right now (though likely bigger on the west coast than here in NYC). I do think that this market will grow. As it becomes easier and easier to deploy models, data teams will inevitably have to deal with the headaches of monitoring a slew of services; but, I think we’re a little ways out from that world.

The Money

I talked with people who had money to invest or knew people with money to invest. I could probably have raised a couple hundred $K pre-revenue. I have academic and professional credentials which matter to some people. Furthermore, you could imagine a pitch involving an AI monitoring system that would use AI to diagnose the AI that it was monitoring. And the AI doing the diagnosing would itself be monitored in a glorious dogfooding ouroboros that would leave investors salivating.

And yet, I was very, very hesitant about taking money. First of all, I didn’t really need it. I had basically made an annual data scientist salary in half a year of consulting, so I had some runway. Furthermore, I did not really need to hire anybody. Sure, I had a lot to learn in building my product, but it was all well within my wheelhouse.

At some point, I conceded that hiring people would be immensely helpful once I got a couple companies on board. It seemed unlikely that I would be able to pay myself a proper salary let alone a team of developers with only a couple customers (we’re not even going to get into pricing in this post…). And so, investor money would be a nice way to get myself and an eventual team proper salaries during growth phase.

But also, growth phase 😬. Once you take that money, you start the treadmill. You are typically taking the money because you would like to spend more than you are bringing in. At a SaaS company, the great margins mean that the vast majority of expenses are peoples’ salaries. The moment you take that money and hire people, you are now sprinting towards revenue and/or the next funding round because otherwise you have to fire people. I am not a fan of sprinting.

I did get some advice to just try to raise some money, pay myself a salary, hire some people (because it’s lonely as fuck by yourself), work on the product, talk to companies, try to get some revenue, and then raise a Seed round.

Philosophically, I had issues with trying to raise money pre-revenue. I wanted to build something that people wanted. Any revenue is a sign of some market need. It seemed like that was the least I could do before I beg investors for money.

The Stoppage

In the end, I had talked with a lot of companies and was failing to get a single one to integrate with my service. I even tried offering it for free to no avail. They had many good reasons for not wanting a service like mine, and I was starting to agree with them. The current, local market prospects looked bleak. I could probably have raised some money, though not a Seed round. It was looking like 1-2 years of begging for money, scraping by, pivoting the product around to either match the market or wait for the market to catch up. And you know what? I decided I didn’t want to do that.

Maybe if I was younger, with fewer “extracurricular” commitments, then I could do that grind. Actually, I know that I could do that grind – I got a PhD in my 20s, spending 6 years in NYC on a $30K / year stipend. Life got considerably better when I graduated and got a job. I worked regular hours and made significantly more (have I mentioned how crazy tech salaries are?), and we all know that lowering your standard of living sucks.

I think that you have to believe so strongly in your startup idea that you will do almost anything to get it to work. If VC money is the surest way to get you towards your goal, then great, you do that. If you are going to have to grind for a couple years, then so be it. If you have to take a lifestyle cut, then that’s a means to the end. I think I had this conviction when I started with the idea. I no longer did.

After much existential straining, I decided to pull the plug in mid-November. It was sad, but it felt pretty good. It was a little like when I decided to quit Physics, but easier.

The Experience

I am thankful for this experience. I learned a hell of a lot. I had little straight-up “data engineering” experience prior to this, and I feel like I got to do a 6-month residency. In fact, I did such little “data science” during this journey that I was nervous to interview for data science jobs after I quit. Did I still know that stuff?

It was also super helpful to actually build a CRUD app. As a data scientist, I have often consumed data created by CRUD app developers, and I have cursed them for constantly overwriting rows of a database and ruining historical queries. I have a lot more empathy for them now.

I talked with a lot of people and learned a lot about the industry. It’s comforting to know that everybody is still trying to figure this all out, though sometimes I wish that I could discover some real magic under the hood at just one company.

With all that said, this experience was much harder than I would have expected, and I quit so early! It is really difficult to work by yourself, day-in and day-out. A co-founder would have helped, immensely. Constant rejections from product pitches is an impressive confidence destroyer. Combine this with coding by yourself, where you get stuck on everything, and you’ve got a cocktail of misery.

When I would talk about the difficulty of the experience to other people, they were all extremely supportive and encouraged me to keep going. On one hand, this is nice and supportive and everything else you would want. On the other hand, they are not the ones who have to live the experience. Sometimes I would wish people would stop being supportive and instead encourage me to quit.

The End?

Since I pulled the plug, Arthur AI raised a healthy Seed round. They have a platform for monitoring machine learning models in production. They appear to focus on enterprise clients. AWS also released a monitoring component to Sagemaker. It looks like there are a couple other startups in this space, too. I’m excited to see what happens.

After freelance consulting and grinding on this startup, I was tired. I took a job at a large, stable company. I shutdown most of my running systems on AWS that were costing me fixed money. All of the code sits in a couple repos on GitHub. I might leave it there. It would be cool to open source it, as a single-tenant solution that can be deployed within ones own cloud. This requires refactoring of the code and the removal of AWS managed services. Even so, the system has never really been tested at a company, and there are definitely some missing pieces. For now, I am going to enjoy my time off before I start my job.